Hands-On Unsupervised Learning Algorithms with Python

- Samul Black

- Dec 20, 2023

Updated: Jul 19, 2025

In this hands-on tutorial, we’ll explore the most commonly used unsupervised learning techniques, such as clustering, dimensionality reduction, and data visualization, using Python libraries such as Scikit-learn, Matplotlib, and Seaborn. Whether you're working with customer data, image embeddings, or text vectors, this guide will show you how to implement practical models that make sense of raw data without labels. Let’s dive into the code and bring your datasets to life.

What is Unsupervised Learning?

Unsupervised Learning is a core branch of machine learning that deals with finding hidden patterns or structures in unlabeled data. Unlike supervised learning—where models are trained using labeled input-output pairs—unsupervised learning algorithms work without predefined outcomes. The model tries to make sense of the data by grouping, compressing, or discovering relationships entirely on its own.

This type of learning is especially useful in situations where labeled data is scarce or expensive to obtain. For example, you might want to analyze customer behavior, detect anomalies in system logs, or reduce the dimensionality of complex datasets—all without needing human-annotated labels. Key characteristics of unsupervised learning include:

No Labels Required: The data does not come with target values or categories.

Pattern Discovery: The model identifies trends, clusters, or structures inherent in the data.

Exploratory by Nature: Ideal for discovering insights when you don't know what to expect from the data.

Common Types of Unsupervised Learning & Use Cases

Unsupervised learning techniques help uncover hidden patterns in unlabeled data. Below are the most common types, along with their practical use cases.

1. Clustering - Groups similar data points based on feature similarity. Examples: K-Means, DBSCAN, Hierarchical Clustering.

2. Dimensionality Reduction - Compresses high-dimensional data while preserving essential structure. Examples: PCA (Principal Component Analysis), t-SNE, UMAP.

3. Association Rule Mining - Discovers relationships between variables in large datasets. Example: Apriori algorithm (used in market basket analysis).

4. Anomaly Detection - Identifies data points that significantly differ from the norm. Examples: Isolation Forest, One-Class SVM.

5. Density Estimation - Estimating the probability distribution of the data, useful in generative modeling, anomaly detection, and understanding data distributions for statistical analysis.

6. Generative Modeling - Creating models that can generate new data resembling the input data's distribution. Applications include generating synthetic data for training models, image generation, and natural language processing.

7. Data Preprocessing - Unsupervised techniques can help in preprocessing steps, like imputing missing values, scaling features, or handling noisy data before supervised learning.

8. Market Segmentation - Given historical market data, these techniques can help identify potential target groups by grouping customers based on purchasing behaviour or demographics.

9. Image Segmentation - Partitioning an image into regions with similar characteristics, or isolating a particular region of an image. These techniques are often used as a building block in classification and recognition models.

10. Document Clustering - A large corpus of text can be organised into groups of related documents, whether or not a set of topics is known in advance.
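To make association rule mining (item 3 above) a little more concrete, here is a minimal sketch of the two quantities it is built on, support and confidence. The transactions are a made-up toy basket dataset, and the helper names `support` and `confidence` are our own. This is a brute-force count rather than the Apriori algorithm itself, which adds candidate pruning on top of the same idea.

```python
from itertools import combinations

# Hypothetical toy transactions, invented purely for illustration.
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Estimate of P(consequent | antecedent): support(A and C) / support(A)."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)


# Brute-force search: keep item pairs present in at least 40% of transactions.
items = sorted(set().union(*transactions))
frequent_pairs = {
    pair: support(pair, transactions)
    for pair in combinations(items, 2)
    if support(pair, transactions) >= 0.4
}

print("Frequent pairs:", frequent_pairs)
print("confidence(bread -> milk):", confidence({"bread"}, {"milk"}, transactions))
```

On real data the number of candidate itemsets explodes, which is why algorithms such as Apriori and FP-Growth avoid enumerating every combination.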

List of Unsupervised Learning Algorithms

Unsupervised machine learning algorithms learn patterns and relationships within the feature set itself. They are defined by their use of unlabelled data: a dataset that contains many examples of features but no target values for them. The algorithms learn the structure, internal relationships, and commonalities of the features directly from the dataset, a process referred to as training or fitting. Some widely used unsupervised learning algorithms are listed below:

K-Means Clustering

Hierarchical Clustering (Agglomerative/Divisive)

DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

Mean Shift Clustering

Gaussian Mixture Models (GMM)

OPTICS (Ordering Points To Identify the Clustering Structure)

PCA (Principal Component Analysis)

t-SNE (t-distributed Stochastic Neighbor Embedding)

UMAP (Uniform Manifold Approximation and Projection)

Autoencoders

Factor Analysis

Apriori Algorithm

ECLAT Algorithm (Equivalence Class Clustering and bottom-up Lattice Traversal)

FP-Growth (Frequent Pattern Growth)

Isolation Forest

One-Class SVM (Support Vector Machine)

Local Outlier Factor (LOF)
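As a quick illustration of what "fitting" means without labels, the sketch below uses a Gaussian Mixture Model, one of the algorithms listed above. The synthetic dataset and all parameter values are assumptions chosen for the example, not recommendations.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.mixture import GaussianMixture

# Synthetic 2-D data; the true labels returned by make_blobs are discarded.
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.8, random_state=42)

# Fitting uses only the features X. No target values are passed.
gmm = GaussianMixture(n_components=3, random_state=42)
gmm.fit(X)

hard_labels = gmm.predict(X)        # most likely component for each point
soft_probs = gmm.predict_proba(X)   # per-component membership probabilities
X_new, components = gmm.sample(5)   # draw 5 new points from the fitted mixture

print("Points per component:", np.bincount(hard_labels, minlength=3))
print("First row of probabilities:", soft_probs[0].round(3))
print("Generated samples shape:", X_new.shape)
```

Unlike K-Means, a GMM gives soft assignments and can generate new samples, so it also touches on the density estimation and generative modeling use cases mentioned earlier.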

Implementing a Few Unsupervised Learning Algorithms with Python

Python offers powerful libraries like Scikit-learn, NumPy, and Matplotlib that make it easy to implement unsupervised learning algorithms. In this section, we’ll walk through practical examples of clustering, dimensionality reduction, and anomaly detection using small synthetic datasets and the classic Iris dataset.

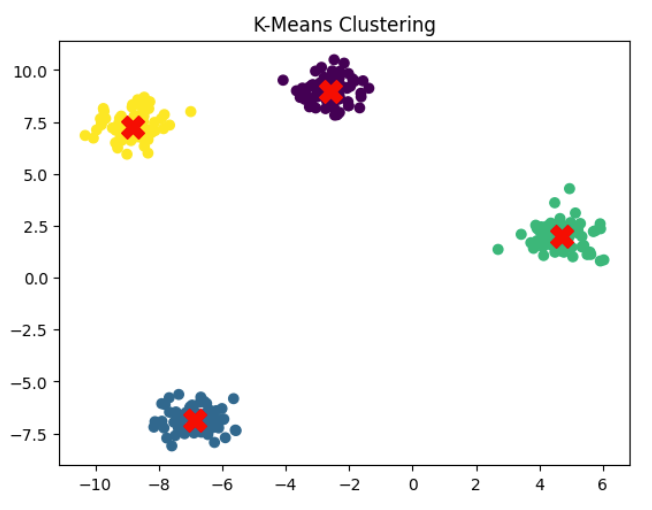

1. K-Means Clustering with Python

K-Means is a popular clustering algorithm that partitions data into k distinct groups based on feature similarity. It’s simple, fast, and effective for discovering structure in unlabeled datasets.

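from sklearn.cluster import KMeans

from sklearn.datasets import make_blobs

import matplotlib.pyplot as plt

X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=42)  # synthetic 2-D data; true labels are discarded

kmeans = KMeans(n_clusters=4, n_init=10, random_state=42)  # n_init set explicitly because its default differs across scikit-learn versions

y_kmeans = kmeans.fit_predict(X)  # cluster index (0 to 3) for every point

plt.scatter(X[:, 0], X[:, 1], c=y_kmeans, cmap='viridis')

plt.scatter(kmeans.cluster_centers_[:, 0], kmeans.cluster_centers_[:, 1], s=200, c='red', marker='X')  # learned centroids

plt.title("K-Means Clustering")

plt.show()

Output: a scatter plot of the points colored by assigned cluster, with the four centroids marked as red X's.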
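The example above fixes k at 4 because we generated the data ourselves. When k is unknown, one common heuristic is to compare silhouette scores across several candidate values. The sketch below assumes the same synthetic blobs; the candidate range 2 to 7 is an arbitrary choice for illustration.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=42)

# Fit K-Means for each candidate k and record the mean silhouette score.
# Scores lie in [-1, 1]; higher means tighter, better-separated clusters.
scores = {}
for k in range(2, 8):
    labels = KMeans(n_clusters=k, n_init=10, random_state=42).fit_predict(X)
    scores[k] = silhouette_score(X, labels)

best_k = max(scores, key=scores.get)

for k, score in scores.items():
    print(f"k={k}: silhouette={score:.3f}")
print("Best k by silhouette:", best_k)
```

The silhouette score is only a guide. It tends to favor compact, roughly spherical clusters, which is also the shape K-Means itself assumes.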

2. DBSCAN Clustering with Python

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) groups together points that are closely packed, while marking points that lie alone in low-density regions as outliers. It’s well suited to clusters of arbitrary, non-convex shape and to noisy datasets, and it does not need the number of clusters in advance.

from sklearn.cluster import DBSCAN

from sklearn.datasets import make_moons

from sklearn.preprocessing import StandardScaler

import matplotlib.pyplot as plt

X, _ = make_moons(n_samples=300, noise=0.05, random_state=42)  # two interleaving half-circles

X = StandardScaler().fit_transform(X)  # eps is a distance, so put both features on the same scale

dbscan = DBSCAN(eps=0.3, min_samples=5)  # eps: neighborhood radius; min_samples: points needed to form a dense region

labels = dbscan.fit_predict(X)  # a label of -1 marks a noise point

plt.scatter(X[:, 0], X[:, 1], c=labels, cmap='plasma')

plt.title("DBSCAN Clustering")

plt.show()

Output:

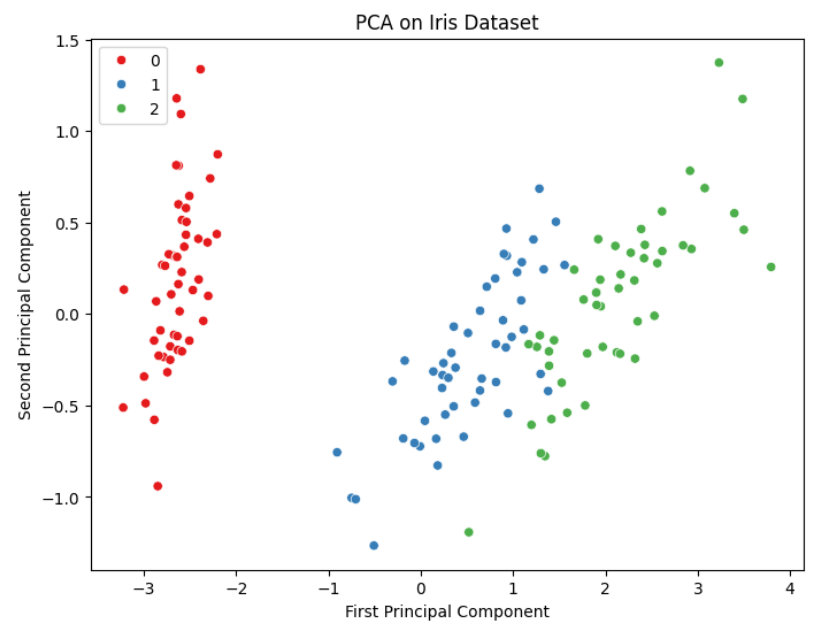
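Because DBSCAN decides the number of clusters itself, it helps to inspect the labels it returns. The sketch below repeats the setup above and counts clusters and noise points; the variable names `n_clusters` and `n_noise` are our own.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_moons
from sklearn.preprocessing import StandardScaler

X, _ = make_moons(n_samples=300, noise=0.05, random_state=42)
X = StandardScaler().fit_transform(X)

labels = DBSCAN(eps=0.3, min_samples=5).fit_predict(X)

# DBSCAN assigns the label -1 to points it considers noise,
# so exclude it when counting clusters.
n_noise = int(np.sum(labels == -1))
n_clusters = len(set(labels)) - (1 if -1 in labels else 0)

print("Clusters found:", n_clusters)
print("Noise points:", n_noise)
```

If almost everything comes back as noise, eps is probably too small; if everything lands in one cluster, it is probably too large.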

3. PCA (Principal Component Analysis) with Python

PCA is a dimensionality reduction technique that transforms high-dimensional data into fewer dimensions while preserving as much variance as possible. It’s often used for visualization or preprocessing.

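from sklearn.decomposition import PCA

from sklearn.datasets import load_iris

import matplotlib.pyplot as plt

import seaborn as sns

iris = load_iris()

X = iris.data  # 150 samples, 4 features

pca = PCA(n_components=2)

X_pca = pca.fit_transform(X)  # project the 4 features onto the top 2 principal components

plt.figure(figsize=(8, 6))

sns.scatterplot(x=X_pca[:, 0], y=X_pca[:, 1], hue=iris.target, palette='Set1')  # species labels are used only for coloring, not for fitting

plt.title("PCA on Iris Dataset")

plt.xlabel("First Principal Component")

plt.ylabel("Second Principal Component")

plt.show()

Output: a 2-D scatter plot of the Iris samples in principal-component space, colored by species.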
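A useful follow-up is to check how much of the original variance the two components actually keep. The sketch below reads `explained_variance_ratio_` from the fitted model and, as an added assumption on our part, compares raw features with standardized ones, since PCA is sensitive to feature scale.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X = load_iris().data

# PCA on the raw features, as in the example above.
ratio_raw = PCA(n_components=2).fit(X).explained_variance_ratio_

# PCA after standardizing each feature to zero mean and unit variance.
X_scaled = StandardScaler().fit_transform(X)
ratio_scaled = PCA(n_components=2).fit(X_scaled).explained_variance_ratio_

print("Raw features:    ", ratio_raw.round(3), "total:", ratio_raw.sum().round(3))
print("Scaled features: ", ratio_scaled.round(3), "total:", ratio_scaled.sum().round(3))
```

When features are measured in different units, standardizing first is usually the safer default, because otherwise the feature with the largest numeric range dominates the first component.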

4. Isolation Forest (Anomaly Detection) with Python

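Isolation Forest is an unsupervised anomaly detection algorithm that isolates observations by repeatedly selecting a random feature and a random split value between that feature's minimum and maximum. Anomalies tend to be isolated in fewer splits than normal points, so a short average path length across the trees signals an outlier. It works well for detecting outliers in high-dimensional datasets.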

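from sklearn.ensemble import IsolationForest

import matplotlib.pyplot as plt

import numpy as np

rng = np.random.RandomState(42)

X_inliers = 0.3 * rng.randn(100, 2)  # tight Gaussian blob

X_outliers = rng.uniform(low=-4, high=4, size=(20, 2))  # points scattered uniformly over the plane

X = np.r_[X_inliers + 2, X_inliers - 2, X_outliers]  # two inlier blobs plus the outliers: 220 points in total

clf = IsolationForest(contamination=0.1, random_state=42)  # contamination: expected fraction of anomalies

y_pred = clf.fit_predict(X)  # +1 for inliers, -1 for anomalies

plt.scatter(X[:, 0], X[:, 1], c=y_pred, cmap='coolwarm')

plt.title("Anomaly Detection using Isolation Forest")

plt.show()

Output: a scatter plot in which points predicted as anomalies (-1) and inliers (+1) appear in different colors.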
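Beyond the hard +1/-1 prediction, the fitted model also exposes a continuous anomaly score, which is handy when you want to rank points or choose your own threshold. The sketch below reuses the synthetic data from above; the variable names are our own.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.RandomState(42)
X_inliers = 0.3 * rng.randn(100, 2)
X_outliers = rng.uniform(low=-4, high=4, size=(20, 2))
X = np.r_[X_inliers + 2, X_inliers - 2, X_outliers]

clf = IsolationForest(contamination=0.1, random_state=42).fit(X)

y_pred = clf.predict(X)             # +1 for inliers, -1 for anomalies
scores = clf.decision_function(X)   # negative scores correspond to anomalies

n_flagged = int((y_pred == -1).sum())
most_anomalous = np.argsort(scores)[:5]  # indices of the 5 lowest-scoring points

print("Points flagged as anomalies:", n_flagged, "of", len(X))
print("Five most anomalous points:\n", X[most_anomalous].round(2))
```

The `contamination` value sets where the score threshold falls, so it directly controls how many points get flagged. If you have no estimate of the anomaly rate, ranking by score is often more informative than the hard labels.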

5. Hierarchical Clustering (Agglomerative) with Python

Hierarchical clustering builds nested clusters by successively merging or splitting them. Agglomerative clustering is a "bottom-up" approach that is useful for discovering hierarchy in data.

from sklearn.datasets import make_blobs

from sklearn.cluster import AgglomerativeClustering

import matplotlib.pyplot as plt

X, _ = make_blobs(n_samples=150, centers=3, cluster_std=0.7, random_state=42)

model = AgglomerativeClustering(n_clusters=3)  # default linkage is Ward; merging stops at 3 clusters

labels = model.fit_predict(X)

plt.scatter(X[:, 0], X[:, 1], c=labels, cmap='Accent')

plt.title("Agglomerative Hierarchical Clustering")

plt.show()

Output:

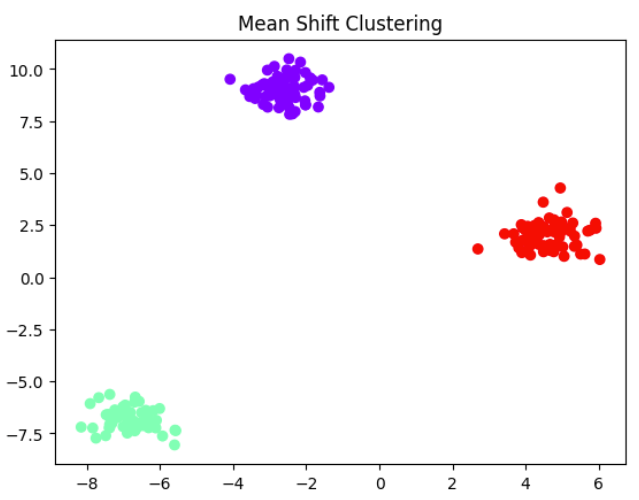
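The scatter plot shows the final clusters but not the hierarchy itself. One way to look at the merge tree is SciPy's `linkage` function, sketched below on the same synthetic data. Using SciPy here is our own choice for illustration; the example above uses Scikit-learn only.

```python
from scipy.cluster.hierarchy import fcluster, linkage
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=150, centers=3, cluster_std=0.7, random_state=42)

# Each row of Z records one merge: the two clusters joined,
# the distance between them, and the size of the new cluster.
Z = linkage(X, method="ward")

# Cut the tree so that at most 3 flat clusters remain.
labels = fcluster(Z, t=3, criterion="maxclust")

print("Merge table shape:", Z.shape)
print("Last three merge distances:", Z[-3:, 2].round(2))
print("Flat clusters after cutting:", len(set(labels)))
```

Passing `Z` to `scipy.cluster.hierarchy.dendrogram` draws the tree. A large jump in the final merge distances is a common visual cue for where to cut it.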

6. Mean Shift Clustering with Python

Mean Shift is a centroid-based algorithm that repeatedly updates candidate centroids to be the mean of the points within a given region, whose size is set by the bandwidth. It does not require specifying the number of clusters beforehand.

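from sklearn.cluster import MeanShift

from sklearn.datasets import make_blobs

import matplotlib.pyplot as plt

X, _ = make_blobs(n_samples=200, centers=3, cluster_std=0.6, random_state=42)

meanshift = MeanShift()  # with no bandwidth given, scikit-learn estimates one from the data

labels = meanshift.fit_predict(X)

plt.scatter(X[:, 0], X[:, 1], c=labels, cmap='rainbow')

plt.title("Mean Shift Clustering")

plt.show()

Output: a scatter plot of the points colored by the cluster Mean Shift assigned to each.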
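The number of clusters Mean Shift finds depends heavily on the bandwidth. The sketch below sets it explicitly with Scikit-learn's `estimate_bandwidth` helper; the `quantile=0.2` value is an assumption picked for this toy dataset and would need tuning on real data.

```python
from sklearn.cluster import MeanShift, estimate_bandwidth
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=200, centers=3, cluster_std=0.6, random_state=42)

# Estimate a bandwidth from pairwise distances in the data.
# A smaller quantile gives a smaller bandwidth and usually more clusters.
bandwidth = estimate_bandwidth(X, quantile=0.2, random_state=42)

meanshift = MeanShift(bandwidth=bandwidth).fit(X)
n_found = len(meanshift.cluster_centers_)

print(f"Estimated bandwidth: {bandwidth:.3f}")
print("Clusters found:", n_found)
```

Trying a few quantile values and watching how `n_found` changes is a quick way to see how sensitive the result is to this parameter.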

Conclusion

Unsupervised learning is a powerful branch of machine learning that unlocks hidden patterns, clusters, and structures in unlabeled data. From K-Means and DBSCAN to techniques like PCA, t-SNE, and GMM, these algorithms play a vital role in real-world applications such as customer segmentation, anomaly detection, recommendation systems, and data visualization. With Python’s robust ecosystem of libraries like Scikit-learn, Seaborn, and umap-learn, implementing and experimenting with these algorithms becomes both accessible and insightful. Mastering these tools not only enhances your data science capabilities but also lays the foundation for deeper machine learning exploration.
